As AI moves from experimentation into production environments, a critical architectural decision emerges: Should you use a SaaS AI gateway, or deploy an enterprise (self-hosted) AI gateway?

This decision is no longer limited to engineering teams. It affects product velocity, cost control, security posture, and long-term scalability. Understanding the difference early helps teams avoid rework, bottlenecks, and premature complexity.

This article breaks down the core concepts, trade-offs, and decision criteria so you can evaluate your options clearly without committing to a specific solution.

What Is an AI Gateway?

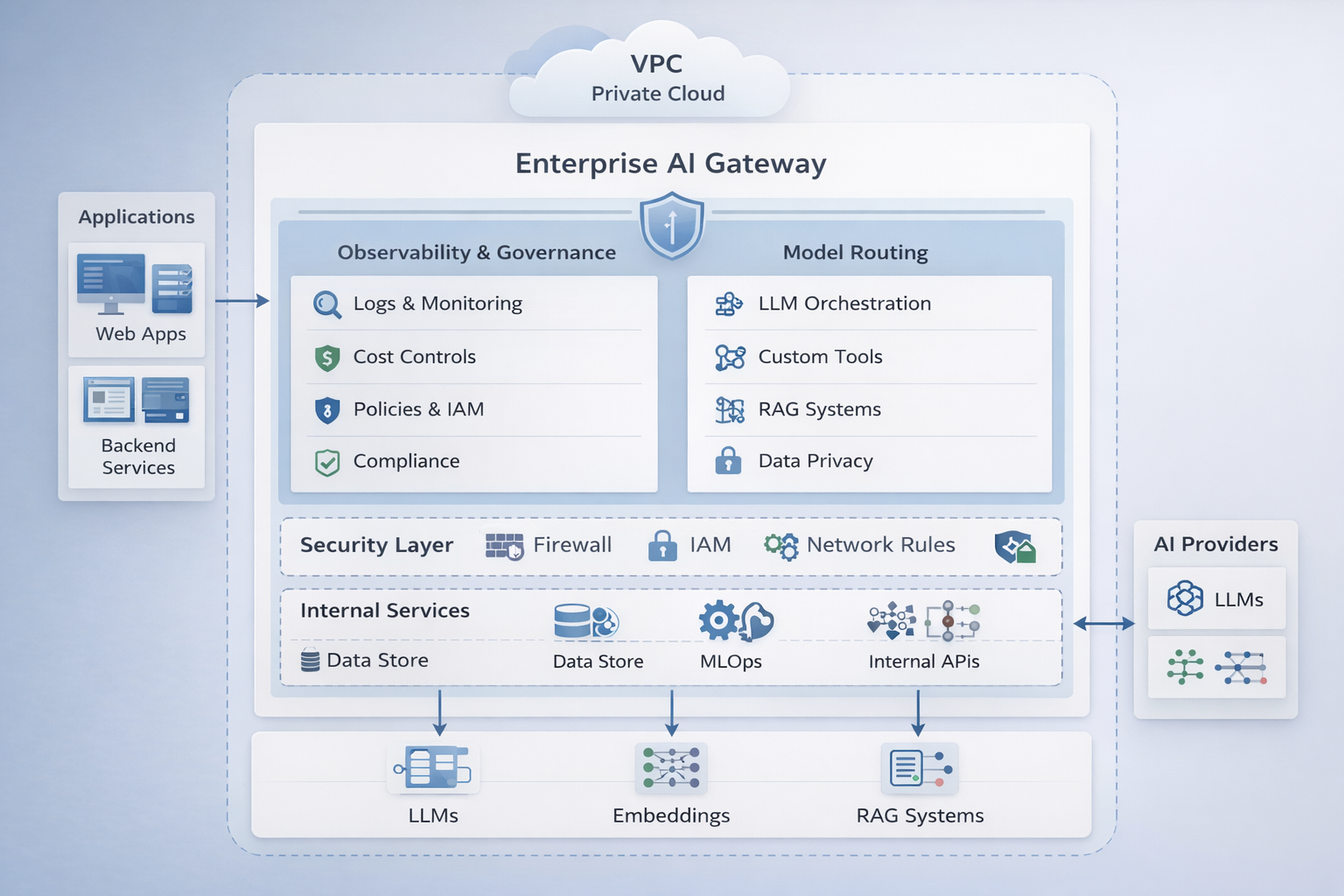

An AI gateway is an intermediary layer between your applications and the AI services they call, such as large language models (LLMs), embedding models, or retrieval-augmented generation (RAG) systems.
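To make the intermediary idea concrete, here is a minimal Python sketch. It assumes each provider can be modeled as a plain function from prompt to text. Applications call a single `complete()` method with a logical model name, and the gateway decides which provider serves the request, falling back down an ordered list when a call fails. `MiniGateway`, `ProviderError`, and the route and provider names are hypothetical illustrations, not any product's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A provider is modeled as "prompt in, text out". Real providers would be
# HTTP clients; these are hypothetical stand-ins for illustration.
Provider = Callable[[str], str]


class ProviderError(Exception):
    """Raised by a provider when a call fails (timeout, quota, outage...)."""


@dataclass
class MiniGateway:
    """Toy gateway: applications call it instead of calling providers directly."""
    providers: Dict[str, Provider] = field(default_factory=dict)
    # Ordered fallback chain per logical model name, e.g. "chat-default".
    routes: Dict[str, List[str]] = field(default_factory=dict)

    def register(self, name: str, provider: Provider) -> None:
        self.providers[name] = provider

    def complete(self, model: str, prompt: str) -> str:
        chain = self.routes.get(model)
        if not chain:
            raise KeyError(f"no route configured for model {model!r}")
        errors = []
        for name in chain:
            try:
                return self.providers[name](prompt)
            except ProviderError as exc:
                errors.append(f"{name}: {exc}")  # remember why, try the next one
        raise ProviderError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def flaky(prompt: str) -> str:
        raise ProviderError("timeout")

    gw = MiniGateway(routes={"chat-default": ["primary", "backup"]})
    gw.register("primary", flaky)
    gw.register("backup", lambda p: f"echo: {p}")
    print(gw.complete("chat-default", "hello"))  # prints "echo: hello"
```

Because the application only knows the logical name `chat-default`, swapping or reordering providers is a routing change inside the gateway rather than a code change in every caller.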

At a high level, AI gateways help teams manage:

- Model routing across providers

- Cost, token, and usage tracking

- Logs, traces, and debugging

- Access control and rate limits

- Experimentation and versioning
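Usage tracking and rate limits are usually enforced per caller, such as an API key or a team. As a rough sketch under stated assumptions, the code below pairs a token-bucket rate limiter with a ledger that turns token counts into an estimated cost. The prices in `PRICE_PER_1K` and the model name `model-a` are placeholders, not real provider pricing, and the injectable `clock` exists only so the limiter can be tested deterministically.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict

# Placeholder prices in USD per 1,000 tokens (hypothetical, not real pricing).
PRICE_PER_1K = {"model-a": {"input": 0.50, "output": 1.50}}


@dataclass
class TokenBucket:
    """Token-bucket limiter: bursts up to `capacity` requests, refilled at `rate` per second."""
    capacity: float
    rate: float
    clock: Callable[[], float] = time.monotonic  # injectable for deterministic tests
    tokens: float = field(init=False)
    updated: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start with a full bucket
        self.updated = self.clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1  # spend one token for this request
            return True
        return False


@dataclass
class UsageLedger:
    """Accumulates token counts and estimated cost per caller (e.g. API key or team)."""
    tokens: Dict[str, int] = field(default_factory=lambda: defaultdict(int))
    cost_usd: Dict[str, float] = field(default_factory=lambda: defaultdict(float))

    def record(self, caller: str, model: str, input_tokens: int, output_tokens: int) -> float:
        price = PRICE_PER_1K[model]
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        self.tokens[caller] += input_tokens + output_tokens
        self.cost_usd[caller] += cost
        return cost
```

In a gateway, `allow()` would run before forwarding a request and `record()` after the provider reports token usage, which is what makes per-team cost reporting possible.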

As AI usage grows, this layer often becomes the control plane for accessing, governing, and scaling AI across the organization.

Why the SaaS vs. Enterprise Question Matters

Early AI implementations often start small: one model, one team, one use case. But success brings complexity:

- Multiple AI providers

- Higher traffic and costs

- More teams shipping AI features

- Increased security and compliance concerns

At this stage, teams must decide whether they want a managed, cloud-based gateway or a self-hosted, enterprise-grade setup.

SaaS AI Gateways: Built for Speed and Learning

What They Are

SaaS AI gateways are fully managed platforms hosted by a vendor. Teams integrate using APIs or SDKs and start routing AI traffic quickly.

Typical Characteristics

- Cloud-hosted and vendor-managed

- Quick onboarding and setup

- Built-in observability and usage dashboards

- Minimal infrastructure responsibility
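Built-in observability dashboards are generally aggregations over per-request records. A minimal sketch, assuming the gateway emits one JSON line per call: the wrapper below records model, status, latency, and payload sizes, and `p95_latency` computes a nearest-rank 95th-percentile latency over those lines. The field names are illustrative, not a specific vendor's log schema.

```python
import json
import math
import time
import uuid
from typing import Callable, List


def logged_call(model: str, prompt: str, call: Callable[[str], str], sink: List[str],
                clock: Callable[[], float] = time.perf_counter) -> str:
    """Run `call(prompt)` and append one JSON log line describing the request to `sink`."""
    start = clock()
    status, output = "ok", ""
    try:
        output = call(prompt)
        return output
    except Exception as exc:
        status = f"error:{type(exc).__name__}"
        raise  # re-raise so the caller still sees the failure
    finally:
        # Runs on success and on failure, so every request produces a record.
        sink.append(json.dumps({
            "request_id": str(uuid.uuid4()),
            "model": model,
            "status": status,
            "latency_ms": round((clock() - start) * 1000, 3),
            "prompt_chars": len(prompt),
            "output_chars": len(output),
        }))


def p95_latency(sink: List[str]) -> float:
    """Nearest-rank 95th-percentile latency (ms) over the logged requests."""
    latencies = sorted(json.loads(line)["latency_ms"] for line in sink)
    if not latencies:
        raise ValueError("no requests logged")
    rank = math.ceil(0.95 * len(latencies))
    return latencies[rank - 1]
```

A SaaS gateway runs this kind of collection and aggregation for you; with a self-hosted gateway, shipping and storing these records becomes part of your own operational work.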

When Teams Choose SaaS AI Gateways

SaaS AI gateways are often a good fit when:

- Teams are validating AI use cases.

- Speed and iteration matter more than deep customization.

- Engineering bandwidth is limited.

- AI usage patterns are still evolving.

Common Trade-Offs

- Limited control over infrastructure and deployment environment

- Constraints around data residency and custom security policies

- Costs that may grow as usage scales
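One way to reason about SaaS costs growing with usage is a break-even estimate. The function below assumes a deliberately simplified model: the SaaS gateway charges a flat fee per 1,000 requests, while self-hosting costs a fixed monthly amount plus a smaller per-request infrastructure cost. Model-provider charges are paid either way, so they drop out. The parameter names and the pricing model itself are assumptions; real vendor pricing (seats, tiers, logged events) may not fit this shape.

```python
import math


def break_even_requests(saas_fee_per_1k: float, self_hosted_fixed_monthly: float,
                        self_hosted_per_1k: float) -> float:
    """Monthly request volume above which self-hosting is cheaper, under a simplified model.

    Assumed model (illustrative only):
      SaaS cost        = saas_fee_per_1k * requests / 1000
      self-hosted cost = self_hosted_fixed_monthly + self_hosted_per_1k * requests / 1000
    Setting the two equal and solving for `requests` gives the break-even point.
    Model-provider token charges are paid in both cases, so they cancel out.
    """
    margin_per_1k = saas_fee_per_1k - self_hosted_per_1k
    if margin_per_1k <= 0:
        return math.inf  # self-hosting never catches up on per-request cost
    return 1000 * self_hosted_fixed_monthly / margin_per_1k
```

For example, with invented numbers of 1.50 per 1,000 requests for SaaS versus 6,000 per month fixed plus 0.50 per 1,000 requests self-hosted, the break-even point is 6,000,000 requests per month. The useful part is the shape of the trade-off, not the specific figures.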

Enterprise AI Gateways: Built for Control and Scale

What They Are

Enterprise AI gateways are deployed and operated within your own environment, such as a private cloud, VPC, or on-premises infrastructure.

Typical Characteristics

- Full control over data flow and storage

- Custom security, IAM, and networking policies

- Integration with internal platforms and tooling

- Greater operational responsibility
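Because an enterprise gateway runs inside your environment, it can enforce your own rules before any request leaves the network. A minimal sketch, assuming a policy made of a provider-region allow-list plus email redaction: `EgressPolicy`, `apply_policy`, `PolicyViolation`, and the region names are hypothetical, and the regex is illustrative only, since real PII detection needs far more than one pattern.

```python
import re
from dataclasses import dataclass
from typing import FrozenSet

# Simple email pattern for illustration only; not a complete PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class PolicyViolation(Exception):
    """Raised when a request would go somewhere the policy does not allow."""


@dataclass(frozen=True)
class EgressPolicy:
    """Hypothetical policy a self-hosted gateway could enforce before forwarding."""
    allowed_regions: FrozenSet[str]
    redact_emails: bool = True


def apply_policy(policy: EgressPolicy, provider_region: str, prompt: str) -> str:
    """Return the prompt that may leave the network, or raise if the destination is disallowed."""
    if provider_region not in policy.allowed_regions:
        raise PolicyViolation(f"region {provider_region!r} is not allowed")
    if policy.redact_emails:
        prompt = EMAIL.sub("[REDACTED_EMAIL]", prompt)
    return prompt
```

The point of the design is placement: the check runs in infrastructure you control, so the rules can follow your own compliance requirements rather than the options a vendor exposes.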

When Teams Choose Enterprise AI Gateways

Enterprise setups are commonly adopted when:

- AI is core to mission-critical workflows.

- Sensitive or regulated data is involved.

- Predictable performance and latency are required.

- The organization operates at large scale.

Common Trade-Offs

- Longer setup and deployment timelines

- Higher operational overhead

- Need for experienced platform or MLOps teams

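SaaS vs. Enterprise AI Gateways: A High-Level Comparison

| Dimension | SaaS AI gateway | Enterprise AI gateway |
| --- | --- | --- |
| Hosting | Cloud-hosted and vendor-managed | Your private cloud, VPC, or on-premises infrastructure |
| Setup | Quick onboarding through APIs or SDKs | Longer setup and deployment timelines |
| Control | Limited control over infrastructure and deployment environment | Full control over data flow, storage, security, IAM, and networking |
| Data residency and security policy | Constrained by what the vendor supports | Defined by your own policies |
| Operational responsibility | Minimal | Higher; requires experienced platform or MLOps teams |
| Cost profile | Can grow as usage scales | Higher operational overhead |
| Typical fit | Validating use cases, evolving usage, limited engineering bandwidth | Mission-critical workflows, sensitive or regulated data, large scale |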

How Teams Typically Evolve

In practice, teams rarely commit to a single integration or deployment approach forever.

A common pattern looks like this:

- Start simple to ship AI features quickly.

- Learn from real usage: costs, performance, and risks.

- Introduce more control as AI becomes business-critical.

Understanding both SaaS and enterprise models early allows teams to design architectures that evolve gracefully, rather than forcing disruptive changes later.
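One common way to keep that evolution cheap is to treat the gateway endpoint as configuration rather than code, so moving from a SaaS gateway to a self-hosted one changes an environment variable instead of every application. The sketch below builds, but does not send, a JSON POST using Python's standard `urllib.request`. The variable names `AI_GATEWAY_URL` and `AI_GATEWAY_TOKEN`, the default URL, and the bearer-token scheme are assumptions for illustration.

```python
import json
import os
import urllib.request
from typing import Mapping, Optional

# Hypothetical default endpoint; override it with AI_GATEWAY_URL.
DEFAULT_GATEWAY_URL = "https://gateway.example.com/v1"


def gateway_request(path: str, payload: dict,
                    env: Optional[Mapping[str, str]] = None) -> urllib.request.Request:
    """Build (but do not send) a request to whichever gateway the environment points at."""
    env = os.environ if env is None else env
    base = env.get("AI_GATEWAY_URL", DEFAULT_GATEWAY_URL).rstrip("/")
    headers = {"Content-Type": "application/json"}
    token = env.get("AI_GATEWAY_TOKEN")
    if token:
        headers["Authorization"] = f"Bearer {token}"  # assumed auth scheme
    return urllib.request.Request(
        url=f"{base}/{path.lstrip('/')}",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Sending is left to the caller (for example with `urllib.request.urlopen`). Pointing `AI_GATEWAY_URL` at a vendor endpoint today and at an internal address later leaves application code untouched.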

Key Questions to Ask Before Choosing

If you’re evaluating AI gateway approaches, these questions can help guide early thinking:

- How critical is AI to our core product today?

- What level of data sensitivity are we dealing with?

- Do we need speed now, control later, or both?

- What operational expertise do we have internally?

There’s no universally “correct” answer, only what fits your current stage and future direction.

Final Takeaway

AI gateways are becoming foundational infrastructure for modern AI-powered products.

- SaaS AI gateways prioritize speed, simplicity, and fast learning.

- Enterprise AI gateways prioritize control, compliance, and scale.

For teams early in their AI journey, understanding these trade-offs is the first step toward making informed, future-proof decisions, long before vendor selection comes into play.

The teams that think about AI gateway architecture early are the ones that scale without friction later.
